Introduction

A few weeks ago I suffered a failure of my Cisco 3750x core switch. This was a rather eye-opening experience for me, as my entire house moves through this switch one way or another. Thankfully I was able to partially restore services, but due to the advanced / modern networking I use (the main issue being the use of 10Gb fiber) my options were either to reconfigure all of my servers or tough it out until I could get a replacement switch. I spent a lot of this downtime planning what I could do to improve this predicament and how I could handle it better if it happened again in the future.

Honestly the internet is littered with stories such as this and I’m sure they will continue to come. If I can stress anything it would be to look over your environment and know exactly what would be affected if one device died and what your plan to fix it is. Since this is only my home network and not a business environment I did not really take having a contingency plan seriously. Maybe you are fine with an outage until you can get replacement parts. Maybe your significant other will kill you if your home network is down for days. While this was not the end of the world for me I certainly did not enjoy this disruption and would like to limit downtime in the future. At the very least this experience has triggered a fun thought experiment for what else can go wrong and how I can remedy it.

Something’s wrong, I can smell it

I awoke on a Saturday morning, made my coffee, and sat down on the couch to begin my normal wake up routine. I happened to look at my cell phone and noticed that it was not connected to Wi-Fi, which was odd. I poked my head down the hallway and noticed that my access point did not have its normal blue glow anymore. At that point I figured one of two things was going on: either my access point was dead or my PoE switch was dead. As I walked toward the basement door the smell hit me. The kind of smell that smells… smelly. The smell of an electronic device that had let the magic smoke that powered it escape. Once I was in front of my rack I was not surprised to see that my Cisco 3750x had no more of those nice blinking lights. What I was not expecting to see when I walked around back was that the PSU was still showing that both the AC input and its own status were OK.

When I bought this switch it only came with one PSU. I had planned to buy the redundant PSU down the road but never got around to it. My backup plan was to see if I could borrow a spare PSU from work for a few days should mine ever fail. Although I had never really planned on the switch itself failing, I knew I had a bunch of 3500 series Cisco switches from when I was studying for my CCNA that I could probably make work if I needed to. That may have worked before I moved a bunch of stuff over to 10Gb fiber, but I clearly had not revisited the idea since then.

So here I stood, looking at this switch that was not running with a PSU telling me it was fine. Since I had no spare PSU to test I wasn’t entirely sure if the switch was dead or if the PSU was actually faulty but I was leaning toward the former. I quickly realized that I could restore most of my server rack and main PC (which I thankfully ran fiber to) by moving the fiber link that runs from my router to my Cisco switch directly into my Celestica D2020 instead.

Now that I had partial service restored the gravity of the situation started to hit me. My access point was power over Ethernet and I did not have a PoE injector (I have a PoE switch, why would I need one of those?) so there would be no restoring that until I had a new switch. I have Hue lights in my bedroom with a wake up routine for weekdays that I would normally control with my phone. No Wi-Fi means I no longer have control of those lights. On Monday they would turn themselves on and the only way they were going to turn back off was by me manually flipping the switch. Since they default to on when power is restored they went back to functioning as normal lights. Thankfully in the rest of my rooms with Hue lights I have dimmer switches that can still communicate with the Hue bridge and function properly.

As a “cord cutter” with no cable TV my living room was now dead. Since my Cisco switch was the only source of copper Ethernet ports anything that was hardwired no longer functioned, and even if it had Wi-Fi capabilities I had no way of restoring Wi-Fi as previously mentioned. At least I still had access to streaming services and YouTube on my PC. I have a few jails on my TrueNAS server that have dedicated 1Gb connections which were now dead as well. My management network is all on copper too. That includes all of my server iDRAC connections, and my ESXi host management connection. That means I no longer had access to configure any virtual machines. Even my segregated LAB network for my desktop was down since that connection was done over copper.

Honestly these are all first world problems but when most of your life revolves around technology (and if you are actually reading this you can probably relate) you can’t help but feel slightly broken.

Copper and fiber don’t mix

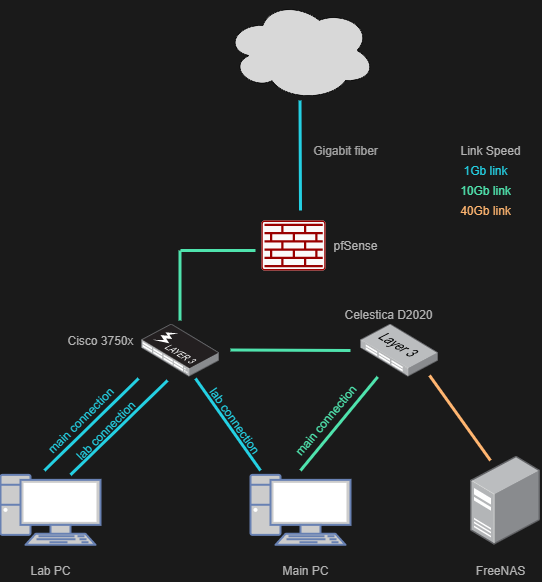

Allow me to borrow a previous network diagram I had created to explain some things.

You can see that I have fiber coming out of my pfSense machine and into my Cisco switch. From there I have more fiber feeding into my D2020. Losing the 3750x cut off my entire network from the router. As I said above it was easy enough to move the link that was connected to the 3750x to the port on the D2020 that was set up as a trunk and had all of the VLANs already configured. That restored service to anything that was connected to the D2020, being my main PC and most of my servers. I started thinking about how I could reuse one of my old Cisco switches to restore Ethernet for everything else and realized I had an issue. My old Cisco switches only have copper interfaces. The D2020 is all SFP+ and QSFP ports. I have no way of connecting these two switches together. It seems having an SFP+ -> RJ45 transceiver might be a good idea now, although my old switches are 100Mb so I need to be sure that it could negotiate down that far.
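To make the "what dies if this device dies" question concrete, here is a minimal sketch that walks a simplified version of the topology described above and lists everything that loses its path to the router when one device fails. The link list is an assumption pieced together from this post, not an export of the real diagram, and the endpoint labels are illustrative.

```python
from collections import deque

# Simplified topology based on the description above. Endpoint labels
# such as "living room" are illustrative, not real hostnames.
LINKS = [
    ("pfSense", "3750x"),
    ("3750x", "D2020"),
    ("3750x", "access point"),
    ("3750x", "living room"),
    ("3750x", "iDRAC/ESXi management"),
    ("D2020", "main PC"),
    ("D2020", "servers"),
]


def reachable(links, root, failed):
    """Return the set of devices still reachable from root when `failed` is down."""
    graph = {}
    for a, b in links:
        graph.setdefault(a, set()).add(b)
        graph.setdefault(b, set()).add(a)
    if root == failed or root not in graph:
        return set()
    seen = {root}
    queue = deque([root])
    while queue:
        node = queue.popleft()
        for neighbor in graph[node]:
            if neighbor != failed and neighbor not in seen:
                seen.add(neighbor)
                queue.append(neighbor)
    return seen


def cut_off(links, root, failed):
    """Devices that lose their path to root when `failed` dies (excluding `failed`)."""
    everything = {device for link in links for device in link}
    return everything - reachable(links, root, failed) - {failed}


if __name__ == "__main__":
    for device in ("3750x", "D2020"):
        print(device, "->", sorted(cut_off(LINKS, "pfSense", device)))
```

Running this for every device in the list is a quick way to spot which single box takes the most down with it. In this simplified model that is the 3750x, which matches what actually happened.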

The other thought I had was connecting the temporary Cisco switch to pfSense with copper, and leaving the fiber connected to the D2020. The issue here is that I have some copper ports and some fiber ports configured for the same VLAN. For example, I have a Raspberry Pi running Pi-hole for my primary DNS server. The Raspberry Pi obviously uses copper and I keep this on my ‘server’ subnet. I also have a redundant Pi-hole instance running in a virtual machine which is connected with fiber. As far as I know, you can’t tell a router that the same subnet exists on two different ports, because how would it know where to route traffic? Technically I think it is possible to bridge the ports in pfSense, but that presents other issues. I also didn’t want to completely reconfigure my network and have to undo everything when the new switch arrived. I had the thought of creating new VLANs for the copper stuff but again I didn’t want to have to undo everything later.
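The subnet conflict is easy to see with a few lines of Python. This is only a sketch of the problem, not how pfSense itself validates interfaces, and the interface names and addresses below are made up for illustration.

```python
import ipaddress
from itertools import combinations

# Hypothetical interface plan: names and addresses are invented examples.
interfaces = {
    "fiber_trunk_vlan20": "10.0.20.1/24",   # existing 'server' subnet over fiber
    "copper_temp_port": "10.0.20.1/24",     # same subnet on a second routed port
    "copper_new_vlan": "10.0.120.1/24",     # a separate subnet for copper devices
}


def overlapping(interfaces):
    """Return pairs of interface names whose networks overlap."""
    nets = {
        name: ipaddress.ip_interface(addr).network
        for name, addr in interfaces.items()
    }
    return [
        (a, b)
        for a, b in combinations(sorted(nets), 2)
        if nets[a].overlaps(nets[b])
    ]


if __name__ == "__main__":
    for a, b in overlapping(interfaces):
        print(f"{a} and {b} claim the same address space")
```

The two ports on the same /24 are flagged because a routing table needs one unambiguous next hop per destination network. The separate subnet passes, which is why the "new VLANs for the copper stuff" idea would have worked, at the cost of re-addressing everything.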

Ordering a switch and cleaning up

Since I had service restored on my main PC it was time to visit eBay. I was still not sure if the switch was dead or if it was really a PSU issue. If it was a PSU issue I would surely be ordering two to avoid this situation again. After looking at prices I quickly realized that I could get a new switch with two PSUs for about $100 more than just ordering the PSUs themselves. The worst case scenario was getting a spare switch if it turned out to be a PSU problem. The seller who had these switches was also located in the same state as me so in my mind I would order on a Saturday, it would ship Monday, and I would have it Tuesday. Boy that was a dumb thought, especially when the listing said the delivery time frame started at the end of the week, which I probably should have noted before ordering. For whatever reason it took a week to get this switch to FedEx so I ended up being down for a week and a half.

The seller of this switch had sold hundreds of them and had a picture of one switch in the description. I figured it was an e-waste reseller since they had sold so many and didn’t really think anything of it. I know from previously buying switches on eBay that you are better off finding someone who has pictures of exactly what you are getting, and in the Cisco world finding someone who has the boot output or at the very least the IOS version listed is the best way to go. Unfortunately I was trying to put shipping speed above all of that so I ignored my usual rules.

Cleanup, documentation, and configs

I knew I had configuration files backed up so restoring my network should be as easy as a copy / paste. In theory. Once I looked in my backup config folder I saw that my last backup for my Cisco switch was in 2019. A lot of stuff changed in the three years since I had last saved this file but at least I had a good starting point. Thankfully I run NetBox in a virtual machine to keep track of my server rack and the connections so I could quickly grab the information from there and fill in my Cisco config. In theory. If you guessed that my NetBox database was out of date too you would be correct. At some point I stopped adding new cables and really just started using NetBox to keep track of my in use IP addresses. I was fairly certain that all of the information that I did have in NetBox was correct but since I really had nothing better to do I figured it was time for an audit and time to bring everything in NetBox back up to date. Thankfully the one thing I have been consistent with is keeping my cabling clean so this did not take long to accomplish.
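A three year old backup is the kind of thing a tiny script can nag you about. Below is a minimal sketch that lists files in a backup folder that have not been touched in a while. The 30 day threshold is an arbitrary assumption, and the function only looks at modification times, so it says nothing about whether the config inside is actually current.

```python
import time
from pathlib import Path


def stale_backups(folder, max_age_days=30, now=None):
    """Return (filename, age_in_days) for files older than max_age_days, oldest first."""
    now = time.time() if now is None else now
    stale = []
    for path in Path(folder).iterdir():
        if path.is_file():
            # File age based on last modification time, in days.
            age_days = (now - path.stat().st_mtime) / 86400
            if age_days > max_age_days:
                stale.append((path.name, int(age_days)))
    return sorted(stale, key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    import sys

    # Pass the backup folder as the first argument; defaults to the current directory.
    folder = sys.argv[1] if len(sys.argv) > 1 else "."
    for name, age in stale_backups(folder):
        print(f"{name}: last modified about {age} days ago")
```

Run from cron or a scheduled task against the config folder, something like this would have flagged the 2019 file long before it mattered.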

With the audit done I went back and updated NetBox and took the switch configuration I had and updated it as best as I could. Everything looked OK so my hope was after pasting it in everything would come up just as it should.

The “new” switch

Finally almost a week and a half after my switch burned up I received the delivery notification for my new switch. By this point I had a game plan. Before I did anything I would power this switch up to verify functionality. If there were any major issues I would immediately contact the seller. After I knew it was OK I would take one of the power supplies and install it in my old switch just to make sure it wasn’t a bad PSU. If it was a bad PSU my old switch would be reinstalled so I wouldn’t need to worry about the configuration, otherwise this new switch was getting installed.

The packaging for this switch was great. As I started unwrapping the bag that the switch was in I noticed how beat up the bottom of the switch was. I know that I’m getting a used product but this was the first sign that perhaps this switch was not well taken care of. I told myself to forget it and continued unwrapping. A surge of anger hit me when I got to the front of the switch and 3/4 of the face plate was missing. Technically this was only cosmetic damage but the port numbers are on this face plate, and now I have hunks of exposed plastic where the port status lights are. I wanted nothing more than to send this switch back but I had just spent over a week waiting for it. I decided to move on with my plan of testing it and my old switch, and at this point I was really hoping that my old switch just needed a new PSU.

I pulled out my laptop and connected to the serial port so I could watch the switch boot. Once it got to the point of loading IOS it posted an error message saying that the image file did not pass verification and was either corrupt or invalid. It did however continue to boot and successfully load, but I now had serious concerns as to why that error came up. The other thing I was not pleased about was that this switch was running IOS 12.2. My old switch was on IOS 15 and I would like this one to be as well. Again I should not have abandoned my rules for buying a switch but I wanted one as quickly as possible. So many mistakes were made by me in this regard.

Since the switch booted and otherwise seemed OK I quickly powered it off and put one of the power supplies in my old switch hoping that it would come back to life. Unfortunately what it confirmed was that my old switch was dead. I had actually opened up the case of my old switch to take a peek inside and look for any obvious problems. I did not find any but I did learn that these switches are not rocket science to disassemble. I thought about it a bit and realized that since my old switch was officially dead there was no reason I couldn’t pull parts off of it, like my pristine face plate for example.

At this point I had a choice, either fight with this seller and try to get a switch in better condition, or I could just rip it apart and fix it myself. I still did not test this switch thoroughly but I decided to do as the kids do and say YOLO (they still say that, right?) and try to bring this thing up to my standards.

The first thing I wanted to do was get IOS 15 on this switch. I knew that Cisco moved to a licensing model with the new IOS versions and was a bit worried since I have not had to deal with it yet. My plan was to back up whatever software was on the switch just in case I was making a mistake, then try to load IOS 15. Technically you need to have a Cisco account to download the software files, but Cisco provides the exact filename and the checksum for the files. Usually with a little Google searching you can find exactly what you are looking for. After getting IOS 15 loaded the switch no longer had the error about having an invalid / corrupt image so that was a huge relief.
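If the image comes from anywhere other than Cisco directly, the published checksum is the only thing vouching for it, so it is worth comparing before the file goes anywhere near the switch. Here is a minimal sketch of that comparison. The default algorithm is an assumption, so use whichever one the published checksum is listed under, and note that the function names are my own.

```python
import hashlib


def file_digest(path, algorithm="sha512", chunk_size=1024 * 1024):
    """Hash a file in chunks so large images do not need to fit in memory."""
    digest = hashlib.new(algorithm)
    with open(path, "rb") as image:
        for chunk in iter(lambda: image.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def matches_published(path, published_hex, algorithm="sha512"):
    """True only if the file's digest equals the published checksum."""
    return file_digest(path, algorithm) == published_hex.strip().lower()


if __name__ == "__main__":
    import sys

    # Usage: python verify_image.py <image file> <published checksum> [algorithm]
    path, published = sys.argv[1], sys.argv[2]
    algorithm = sys.argv[3] if len(sys.argv) > 3 else "sha512"
    ok = matches_published(path, published, algorithm)
    print("checksum OK" if ok else "checksum MISMATCH, do not flash this file")
```

A mismatch means the file is not the one the vendor published, whatever the filename says.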

Since I had the software updated I loaded my config and rebooted the switch to make sure everything came back in order. All looked well, so it was time to swap the face plate. I took my time with this since the old plastic was brittle and there are only small tabs holding the face plate to the switch, and everything went well.

Happy ever after and future plans

Happily once I got the switch racked and powered up everything came back to life as expected. OK, I had to issue a few ‘no shut’ commands on some ports but otherwise I did not need any other config changes.

During this whole experience I kept thinking back to when I was studying for my CCNA, specifically some training I took from CBT Nuggets. Jeremy Cioara drilled into my head “Two is one, one is none” and never has that been more clear. This switch failing was probably the worst case scenario for me. If my router fails I have spare servers I can put in place. If my D2020 fails it would only take a few port configurations on the 3750x to bring back my entire setup at 1Gb. I’ll be ordering another 3750x (from a different seller this time) as a cold spare since it is the most difficult thing for me to replace. I could rack it and have it be redundant from the start, but my wallet already hates my power bill so I don’t think that will be necessary.

Now that I’ve had this failure I’m thinking of other things I can improve around my home network. For example, I had two Pi-hole servers running so DNS never failed, but I learned that Linux servers will use the first DNS entry and wait for a timeout before moving to the second. On my to-do list now is to set up a floating IP so one DNS server going down is seamless to my servers. I’ve also been extremely bad about backups. I know the 3-2-1 rule exists, but I’m mainly relying on TrueNAS and snapshots. I’ve realized that there is no reason I can’t have a Syncthing virtual machine running so I can at least have my more critical files on both my file server and a RAID 5 SSD array on my ESXi host. You know, things like those config files that I don’t keep up to date anyway ;)
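For anyone wondering why a dead primary DNS server hurts even with a working secondary, here is a rough model of the wait. It assumes the defaults documented in the resolv.conf man page (a 5 second timeout and 2 attempts) and deliberately simplifies how the real resolver paces its retries, so treat the numbers as ballpark figures.

```python
def lookup_delay(servers_up, timeout=5, attempts=2):
    """Seconds spent waiting before the first answer, or None if nothing answers.

    servers_up is a list of booleans in resolv.conf order (True = responding).
    Simplified model: servers are tried in order, each dead one costs a full
    timeout, and the whole list is retried up to `attempts` times.
    """
    waited = 0
    for _ in range(attempts):
        for up in servers_up:
            if up:
                return waited
            waited += timeout
    return None


if __name__ == "__main__":
    print("primary up:  ", lookup_delay([True, True]), "s")
    print("primary down:", lookup_delay([False, True]), "s")
    print("both down:   ", lookup_delay([False, False]))
```

With the primary down, every fresh lookup in this model eats the full timeout before the secondary gets asked. A floating IP avoids that, because the address listed first always points at a server that is alive.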